Evaluating models

Lecture 22

Duke University

STA 199 - Fall 2025

November 13, 2025

Warm-up

While you wait: Participate 📱💻

What is sensitivity also known as?

- True positive rate

- True negative rate

- False positive rate

- False negative rate

- Recall

Scan the QR code or go to app.wooclap.com/sta199. Log in with your Duke NetID.
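As a reference for the terms in the poll, here is a minimal sketch of how the four rates are computed from confusion-matrix counts. It is written in plain Python purely for illustration (the course itself works in R); the function name `rates` and the counts are hypothetical and made up for this example.

```python
def rates(tp, fn, tn, fp):
    """Return the four classification rates from confusion-matrix counts."""
    return {
        # Share of actual positives predicted positive (true positive rate, recall)
        "sensitivity": tp / (tp + fn),
        # Share of actual negatives predicted negative (true negative rate)
        "specificity": tn / (tn + fp),
        # Share of actual positives predicted negative
        "false_negative_rate": fn / (tp + fn),
        # Share of actual negatives predicted positive
        "false_positive_rate": fp / (tn + fp),
    }

# Hypothetical counts, made up for illustration only
example = rates(tp=40, fn=10, tn=45, fp=5)
print(example)
```

Sensitivity and the false negative rate sum to 1, as do specificity and the false positive rate, because each pair splits the same group of observations.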

Announcements

Projects due tonight, peer evals due tomorrow night

Practice Exam 2 is posted on the course website

Reply to the post on Ed with requests for topics or concepts to cover in the exam review [thread]

From last class: Participate 📱💻

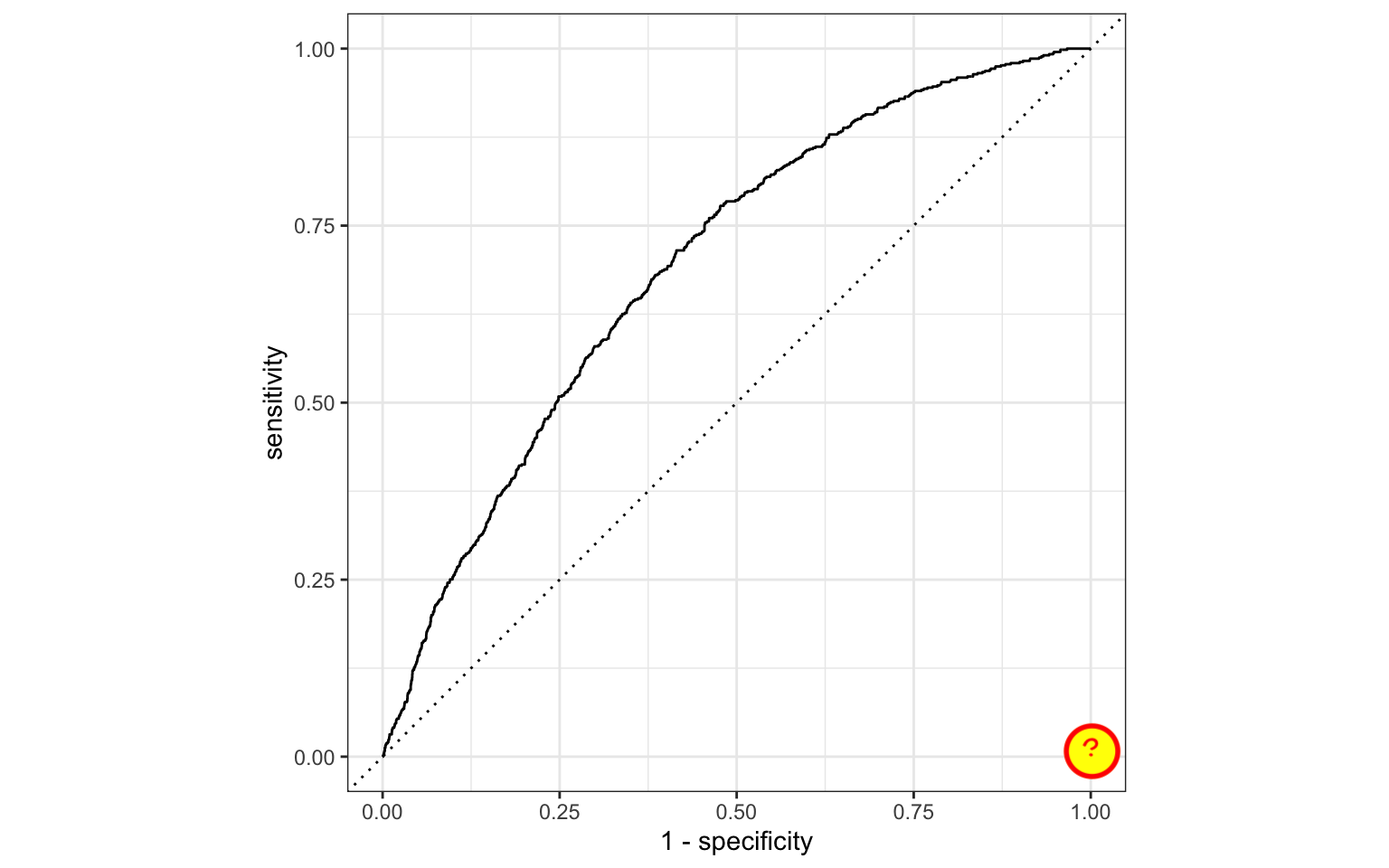

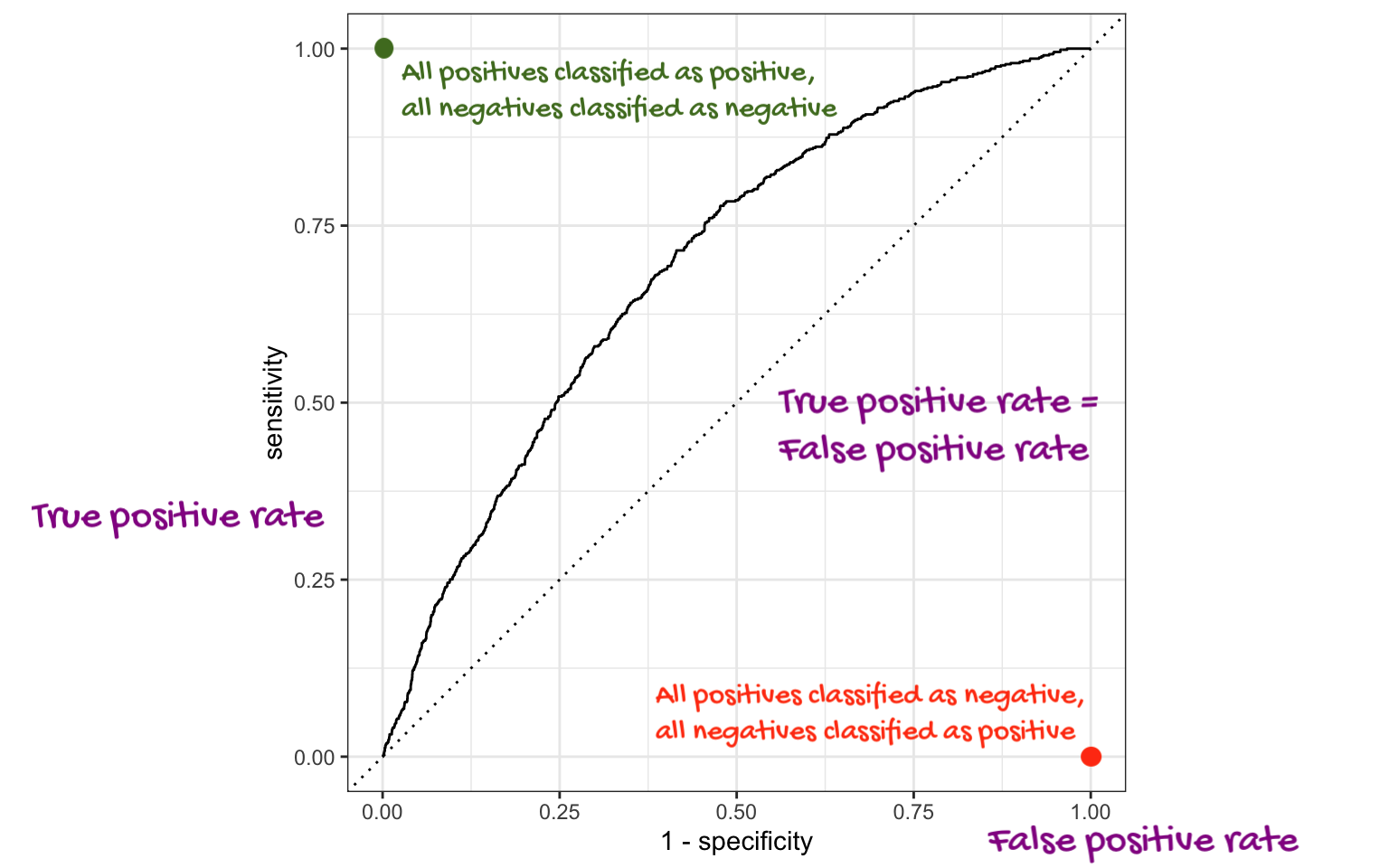

Which of the following best describes the area annotated on the ROC curve?

- Where all positives are classified as positive and all negatives are classified as negative

- Where the true positive rate equals the false positive rate

- Where all positives are classified as negative and all negatives are classified as positive

ROC curve

Which corner of the plot indicates the best model performance?
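To make the axes of the plot concrete, the sketch below traces ROC points by hand: for each threshold it classifies observations as positive when the predicted probability is at or above the threshold, then records the false positive rate (x) and true positive rate (y). A perfect classifier would reach the point with false positive rate 0 and true positive rate 1. This is a plain-Python illustration, not the course's R workflow; `roc_points`, `auc`, the labels, and the scores are all hypothetical.

```python
def roc_points(y_true, scores, thresholds):
    """Return (false positive rate, true positive rate) pairs, one per threshold."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = []
    for t in thresholds:
        # Predict positive when the score is at or above the threshold
        predicted = [1 if s >= t else 0 for s in scores]
        tp = sum(1 for y, p in zip(y_true, predicted) if y == 1 and p == 1)
        fp = sum(1 for y, p in zip(y_true, predicted) if y == 0 and p == 1)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Approximate the area under the ROC curve with the trapezoidal rule."""
    ordered = sorted(points)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(ordered, ordered[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical labels (1 = positive) and predicted probabilities
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

points = roc_points(y_true, scores, thresholds=[1.1, 0.8, 0.4, 0.35, 0.1])
print(points)
print(auc(points))
```

The highest threshold classifies nothing as positive, giving the point (0, 0), and the lowest classifies everything as positive, giving (1, 1); the thresholds in between trace the curve connecting them.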

Next steps

ae-14-chicago-taxi-classification

Go to your ae project in RStudio.

If you haven’t yet done so, make sure all of your changes up to this point are committed and pushed, i.e., there’s nothing left in your Git pane.

If you haven’t yet done so, click Pull to get today’s application exercise file: ae-14-chicago-taxi-classification.qmd.

Work through the application exercise in class, and render, commit, and push your edits.

Recap

Split data into training and testing sets (generally 75/25)

Fit models on training data and reduce to a few candidate models

Make predictions on testing data

Evaluate predictions on testing data using appropriate predictive performance metrics

- Linear models: Adjusted R-squared, AIC, etc.

- Logistic models: False negative and positive rates, AUC (area under the curve), etc.

Don’t forget to also consider explainability and domain knowledge when selecting a final model
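The first step of the recap, the 75/25 split, can be sketched as follows. This is a minimal plain-Python illustration rather than the R tooling used in the application exercises; the function name `split_train_test`, the seed value, and the 100 placeholder rows are assumptions made for this example.

```python
import random


def split_train_test(rows, train_fraction=0.75, seed=199):
    """Shuffle a copy of the rows and split it into training and testing sets."""
    shuffled = list(rows)
    # A fixed seed makes the random split reproducible
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical data: 100 row identifiers standing in for observations
rows = list(range(100))
train, test = split_train_test(rows)
print(len(train), len(test))
```

Models are fit using only `train`; `test` is held back until the candidate models are ready to be evaluated, so the performance metrics reflect data the models have not seen.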

In a future machine learning course: cross-validation (repeatedly partitioning the training data into training and validation sets to evaluate predictive performance before using the testing data), feature engineering, hyperparameter tuning, and more complex models (random forests, gradient boosting machines, neural networks, etc.)
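The partitioning idea behind cross-validation can be sketched as below: the training data is divided into k folds, and each fold takes one turn as the validation set while the remaining folds are used for fitting. This is a simplified plain-Python illustration under stated assumptions (no shuffling, a hypothetical helper named `k_fold_indices`, and 10 placeholder rows), not a full implementation.

```python
def k_fold_indices(n_rows, k):
    """Yield (training, validation) index lists, one pair per fold."""
    indices = list(range(n_rows))
    # Spread any remainder over the first folds so sizes differ by at most 1
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        training = indices[:start] + indices[start + size:]
        yield training, validation
        start += size


# Hypothetical example: 10 training rows split into 5 folds
folds = list(k_fold_indices(n_rows=10, k=5))
for training, validation in folds:
    print(validation, training)
```

Each row appears in exactly one validation set, and the testing data plays no part in this loop.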

Note

We will only learn about a subset of these in this course, but you can go further into these ideas in STA 210 or STA 221 as well as in various machine learning courses.